Earlier this week Ned Otter (@NedOtter) brought up a question about Instant File Initialization on SQL Server on Linux, check out the thread here. I was up way too early in the morning, as I normally am, so I decided to poke around and see how it was done. SQL Server pros, here you can see you can get some deep internal information from the OS very easily. Hopefully with this blog post you’ll be able to compare how this is done on Windows and draw the connections between the two platforms and leverage this technique in other areas.

Since this post was published, Bob Dorr of the SQL Server Engineering team also has a post on this topic here.

Let’s check it out…

SQL on Linux Internals

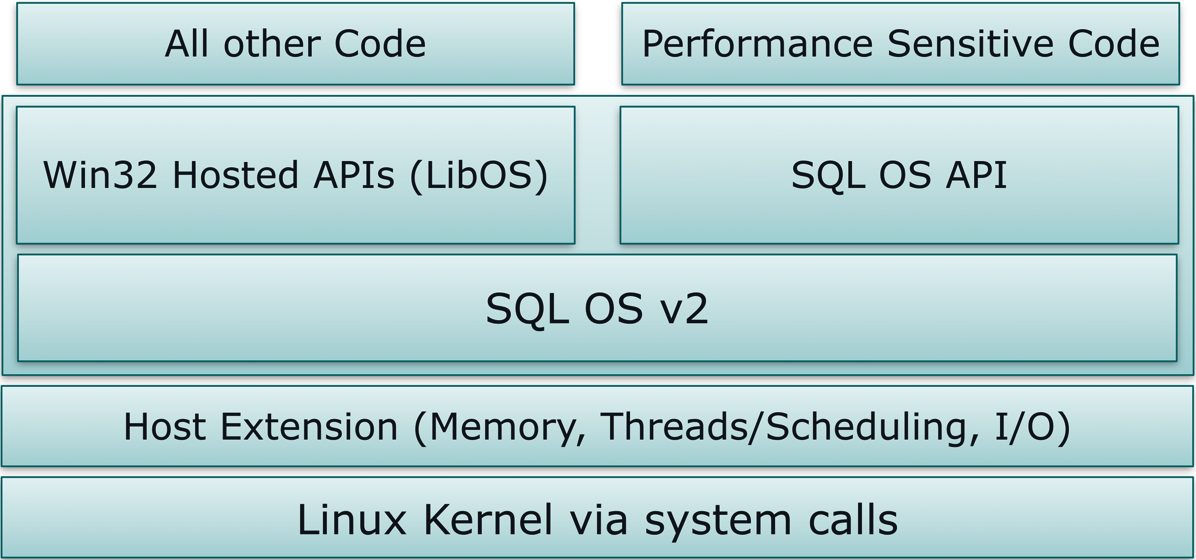

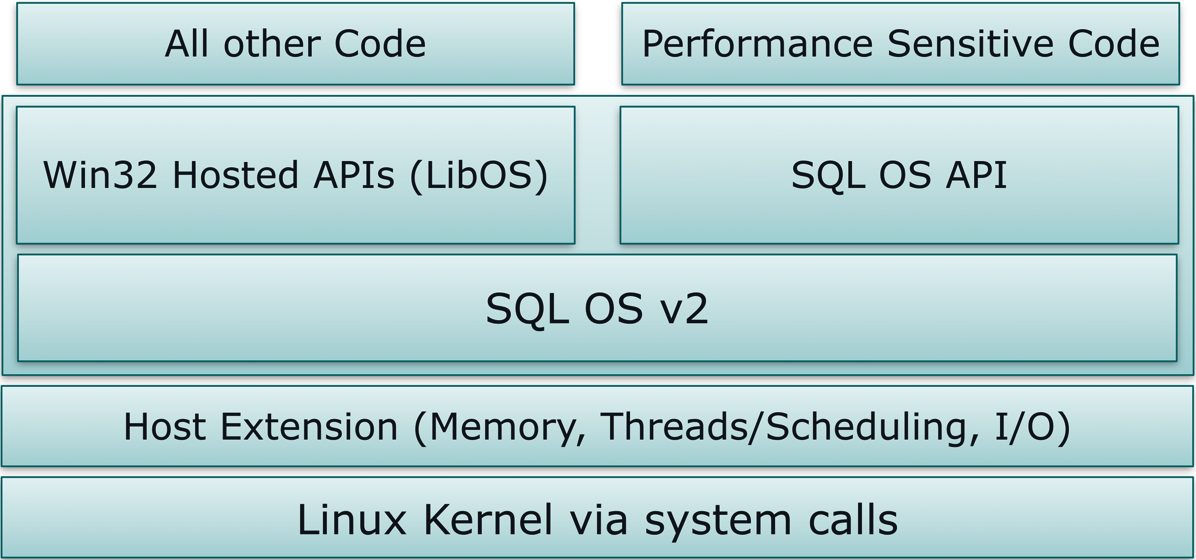

First, the internals of SQL Server on Linux leverage a process virtualization technique called SQLPAL. Inside SQLPAL, is a Win32 environment custom tailored to support SQL Server. Next, SQLPAL needs a way to talk to Linux so that it can access the physical resources of the system and it does this via something called the Host Extensions. Essentially the HE map SQLPAL’s Win32 and SQLOSv2 API calls to Linux system calls. In Linux, system calls provide access to system resources, things like CPU, memory and network or disk I/O. And that’s the flow, SQL Server calls SQLPAL, which calls the Host Extensions, which calls Linux’s system calls to interact with system resources.

Figure 1: SQLPAL

Using strace to Collect System Calls

Knowing this, we can leverage Linux tools to see what SQL Server is doing when it interacts with the operating system. Specifically we can leverage tools like strace to see which systems calls it uses to perform certain tasks and in this case we’re going to look at how SQL on Linux implements Instance File Initialization. So let’s attach strace to our SQL Server on Linux process, sqlservr.

It’s needless to say, do not reproduce this on a system that’s important to you. Tracing will SLOW things down.

Attach strace to your currently running SQL Server process (sqlservr). So let’s start with finding our SQL Server process with ps. There is always two (insert Sith reference) you’re going to want the child process here. Easily identified by the one with the higher process ID. So we’ll pick 1416.

[root@server2 ~]# ps -aux | grep sqlservr

mssql 1414 3.0 0.4 198156 18244 ? Ssl 06:23 0:04 /opt/mssql/bin/sqlservr

mssql 1416 6.3 15.9 1950768 616652 ? Sl 06:23 0:08 /opt/mssql/bin/sqlservr

Let’s walk through the strace parameters here, -t adds a time stamp, -f will attach strace to any threads forked from our traced process and -p is the process we want to trace .

strace -t -f -p 1416 -o new_database.txt

Creating a Database with Instant File Initialization

With

strace up and running let’s turn on the trace flags to enable output for Instant File Initialization and create database that has a 100MB data file and a 100MB log file. Check out this

post from Microsoft for more details on the trace flags. This database create code is straight from their post. I changed the model database’s data and log file sizes to 100MB each. Also, it’s important to note Instance File Initialization is only for data files, log files are zeroed out due to requirements for crash recovery. We’re going to see that in action in a bit…

DBCC TRACEON(3004,3605,-1)

GO

CREATE DATABASE TestFileZero

GO

EXEC sp_readerrorlog

GO

DROP DATABASE TestFileZero

GO

DBCC TRACEOFF(3004,3605,-1)

Once the database creation is done, stop your strace and let’s go and check out the data gathered in the file.

Poking Around in Linux Internals, Creating the MDF

Inside your output file you’re going to see a collection of system calls. In Linux a system call is the way a user space program can ask the kernel to do some work. And in this case SQL Server on Linux is asking the kernel to create a data file, create a log file and zero out the log file. So let’s check out what happens when the MDF is created.

1630 09:03:28.393592 open(“/var/opt/mssql/data/TestFileZero.mdf”, O_RDWR|O_CREAT|O_EXCL|O_DIRECT, 0660) = 154

First thing, that 1630, that’s the process ID of the thread that’s doing the work here. That PID is different than the one we attached strace to because it’s a thread gets created when we execute our database create statements.

Next, you see a call to

open, it’s opening the file TestFileZero.mdf. The next parameter are flags to tell

open what to do, in this case

O_RDWR opens the file for read/write access,

O_CREAT creates a file,

O_EXCL prevents open from overwriting the file if it exists, and

O_DIRECT enables synchronous I/O to the file and disables the file system cache,

0660 is the file mode and the return value is 164…this is the file descriptor for the file created. A file descriptor (fd) is used to represent and provide access to the file that was just opened. We’ll pass this to other system calls so they can interact with the file addressed by the fd.

1630 09:03:29.087471 fallocate(154, 0, 0, 104857600 = 0

1630 09:03:29.087579 <… fallocate resumed> ) = 0

Next, we see a call to fallocate on the file descriptor 154, the first 0 is the mode, which tells fallocate to allocate disk space within the range specified in the third and forth parameters, offset and length respectively. And here is from 0 to 100MB. If you read the man page for fallocate, there is a FALLOC_FL_ZERO_RANGE flag that can be passed into the mode parameter. In this call, mode is set to 0 so this flag is not set. Further, the man page indicates that this flag is supported on XFS in Linux kernel 3.15. I’m on 3.10 using CentOS 7.4. So there’s no zeroing magic happing at the file system level.

1630 09:03:29.087603 ftruncate(154, 104857600) = 0

Next, there’s a call to ftruncate on fd 154. This call sets the length of the file to the parameter passed in this case 100MB.

1630 09:03:29.091223 io_submit(140030075985920, 1, [{data=0x185b4dc48, pwrite, fildes=154, str=”\1\v\0\0\0\2\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\0\1\0c\0\0\0\2\0\374\37″…, nbytes=8192, offset=8192}]) = 1

Next, we’ll see a sequence of calls using io_submit, which submits asynchronous I/O to disk. The parameters for this one are an aio_context_t, the number of blocks in the request, then an array of AIO control blocks. The AIO control blocks (struct iocb) are what’s inside the brackets [], the key parameters are pwrite and filedes. That’s a write operation and the file descriptor which matches the fd that we’ve been working with, 154. str is the actual data, then the number of bytes in this operation followed by the offset, which is the starting point of the IO.

There are 17 write operations, in my strace output, these are laying out the mdf file, which has a header and other metadata through the file, but it’s certainly not zeroing the file out. We’ll see how zeroing works when we get to the LDF. Here’s a

link to the definition to the IO Control Block (

struct iocb) and more on asynchronous I/O in Linux.

1630 09:03:29.098452 fsync(154

1630 09:03:29.098640 <… fsync resumed> ) = 0

The fsync call instructs the file descriptor to flush all buffers to disk.

1630 09:03:29.099260 close(154) = 0

The

close call closes the file descriptor, releases any locks and allows the file to be reused by other processes.

Poking Around in Linux Internals, Creating and Zeroing the Transaction Log File

So that was the creation of the data file, now let’s check out how the transaction log file is created. Instant File Initialization only applies to data files, transaction log files must be zeroed out for crash recovery. Let’s dig in.

1630 09:03:29.831413 open(“/var/opt/mssql/data/TestFileZero_log.ldf”, O_RDWR|O_CREAT|O_EXCL|O_DIRECT, 0660) = 154

We see an open call again, nearly identical, and the file descriptor returned is again 154.

1630 09:03:30.395757 fallocate(154, 0, 0, 104857600) = 0

There’s a call to fallocate to provision the underlying storage.

1630 09:03:30.395814 ftruncate(154, 104857600) = 0

Then we see a call to truncate again to ensure the size of the file is 100MB.

1630 09:03:30.396466 fsync(154

1630 09:03:30.397672 <… fsync resumed> ) = 0

Then there’s a call to fsync again, flushing buffers to disk.

1630 09:03:30.400042 write(1, “Z”, 1) = 1

1630 09:03:30.400088 write(1, “e”, 1) = 1

1630 09:03:30.400134 write(1, “r”, 1) = 1

1630 09:03:30.400180 write(1, “o”, 1) = 1

1630 09:03:30.400246 write(1, “i”, 1) = 1

1630 09:03:30.400301 write(1, “n”, 1) = 1

1630 09:03:30.400348 write(1, “g”, 1) = 1

…output omitted

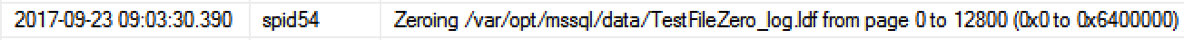

Now things get special…we see a series of write calls. This write call isn’t writing to the file…it’s writing to standard out, as indicated by the first parameter which is 1. 1 is the file descriptor for standard out. The second parameter is the data to be written out, in this case you can see it’s a single character, the third parameter is the size of the data being written. The return value, that’s the last 1 on the line, that’s the number of bytes written to the file. And guess where this data is being sent too…the SQL Server Error log! See the string “Zeroing”? The SQL Server Error Log is written to file and to standard out. Very cool. The fact that it hits standard out and it’s systemd service unit means the SQL Server Error Log also does into journald. Very cool!

1630 09:03:30.406250 io_submit(140030075985920, 1, [{data=0x185b62308, pwritev, fildes=154, iovec=[{“\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300″…, 4096}…], offset=0}]) = 1

…Output omitted

1630 09:03:30.454831 io_submit(140030075985920, 1, [{data=0x185b4dc48, pwritev, fildes=154, iovec=[{“\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300\300″…, 4096}…], offset=100663296}]) = 1

Now for the log file, we’re going to see io_submit calls again, this time MANY more, from the output here it shows the starting offset = 0 and an ending offset = 100663296, there’s many io_submit calls in between. If I did the math correctly, each io_submit writes 4MB of data, this last io_submit call is starting offset is at 96MB, plus that IO then we have our zeroed 100MB log file.

The difference in the time stamps between the first call and the start of the last call 48.581ms. strace’s time stamps are in microseconds.

1630 09:03:30.460172 write(1, “Z”, 1) = 1

1630 09:03:30.460215 write(1, “e”, 1) = 1

1630 09:03:30.460259 write(1, “r”, 1) = 1

1630 09:03:30.460315 write(1, “o”, 1) = 1

1630 09:03:30.460361 write(1, “i”, 1) = 1

1630 09:03:30.460406 write(1, “n”, 1) = 1

1630 09:03:30.460450 write(1, “g”, 1) = 1

When the io_submit calls are finished, we see a series of writes to standard out and the same data is in the SQL Error log and we see that zeroing is finished and it took 60ms…very close to what was reported by strace’s time stamps! There are additional calls from the printing of the start zeroing message to the stop zeroing message that are not included in my output here, plus the time that last IO takes to complete. That’s why there’s a variance in strace’s reported time and SQL Error Log’s reported time.

1630 09:03:30.469088 fsync(154

1630 09:03:30.469532 <… fsync resumed> ) = 0

We call fsync to flush our buffers for this file.

1630 09:03:30.469977 close(154) = 0

Then we close the file.

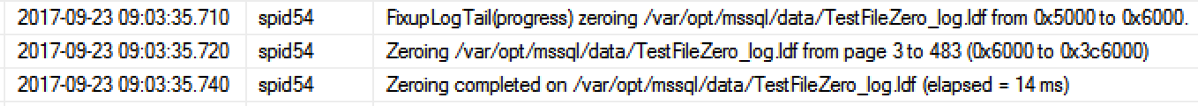

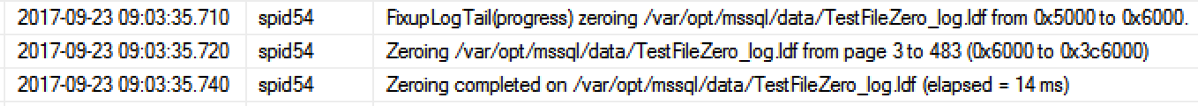

In your strace data, you’ll see another sequence of zeroing in the file for zeroing the tail of the log, here’s the output from the SQL Error Log. The process is similar with the exception of the data. There are some non-zero values being written at the end of the file for crash recovery reasons.

And there we have it, this is how Instant File Initialization works on Linux and some Linux kernel internals for you SQL Pros!