Understanding SQL Server IO Size

This blog post shows you how NTFS stores data, what the NTFS Allocation Unit means, and how SQL Server performs IOs of variable size.

How NTFS Stores Data on Disk

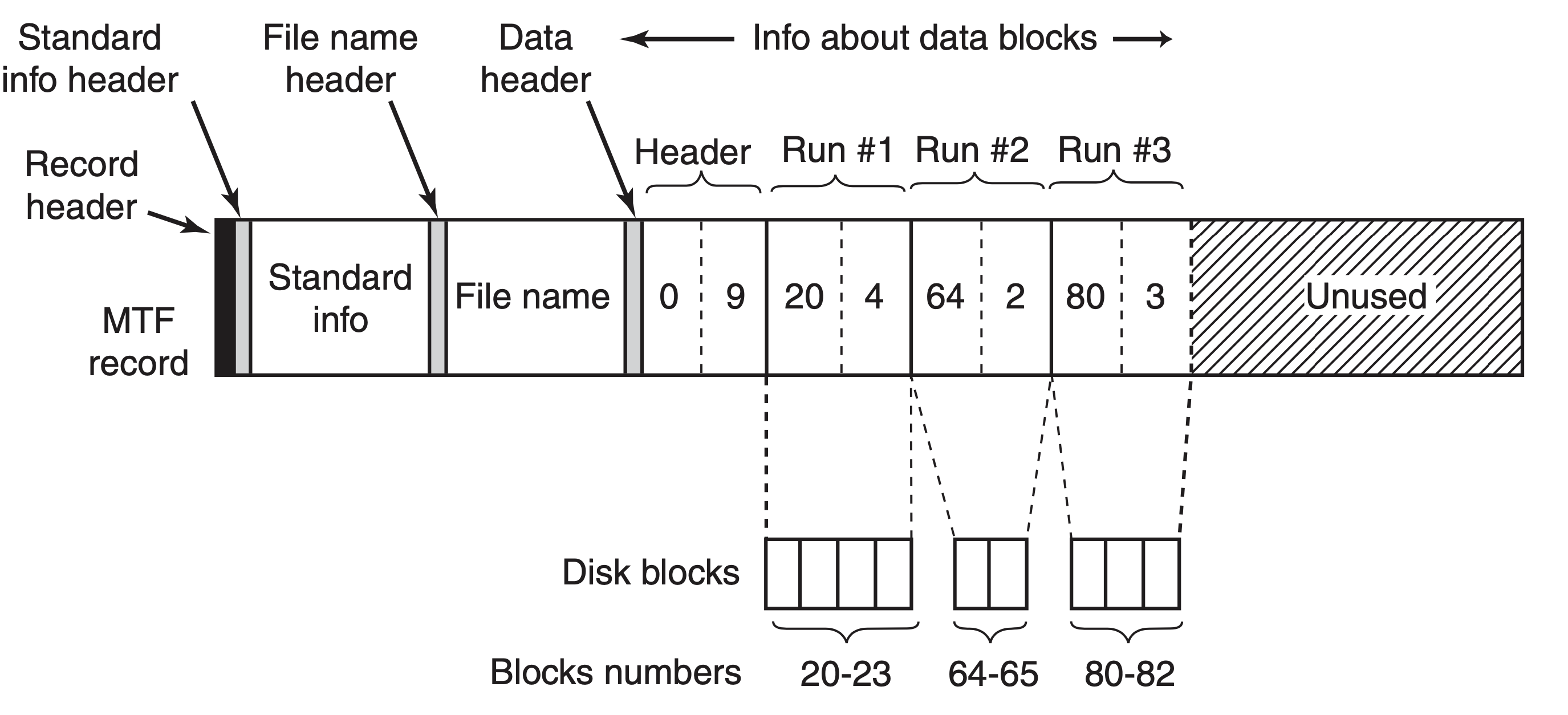

A Master File Table (MFT) is the data structure that describes files and directories on NTFS. In Figure 1, you can see an MTF record has several sections describing the metadata about the file and pointers to blocks that make up the file. A block, also referred to as a cluster in Windows, is an abstraction over one or more physical structures (sectors or pages depending on the media) presented by the underlying disk. A block/cluster is also the atomic allocation unit from a file system and has a configurable size. On NTFS, this is referred to as the NTFS Allocation Unit Size and is a configurable attribute of the file system. By default, it is 4KB and can be as large as 2MB. Since a block is a unit of allocation, if a file is between 1 byte and the file system’s allocation unit size, it will take up exactly one block/cluster on the file system. As the file grows, more blocks/clusters are allocated to represent the file. The MFT data structure tracks which blocks make up a file. The block allocator of the file system will try to ensure blocks are physically adjacent on the disk and groups them together in runs.

A 4KB NTFS Allocation Units size is considered best practice on general-purpose file systems. And 64KB is considered best practice for SQL Server…but why? Let’s keep digging…

Image Source: “Modern Operating Systems” 4th Edition. Andrew Tanenbaum, Herbert Bos.

Why Does SQL Server Care About NTFS Allocation Unit Size?

Inside of SQL Server, there’s also a data structure used for allocation, the Extent. An Extent is a 64KB data structure representing a contiguous sequence of eight 8KB pages. It is often thought that SQL Server performs all disk IO in Extent size operations and that’s why 64KB NTFS Allocation Units are considered best practice. SQL Server does not perform all IOs in Extent sized IOs. The NTFS Allocation unit is the unit of allocation for blocks/clusters in the file system and is composed of ranges of pages or sectors on a disk.

The primary reason why SQL Server cares about NTFS Allocation Unit Size is when a 64KB Extent is created and written to disk, a 64KB NTFS Allocation Unit guarantees that all eight pages inside the Extent are physically written into one NTFS AU and are physically adjacent on disk. This used to be a big deal when disks would spin because the head would have to move and the platter had to rotate to the appropriate sector on disk and then read a range of pages in one disk transaction. But there’s more…let’s keep digging into that concept…

SQL Server Issues IO in Variable Sizes

The size of IOs issued by SQL Server is variable based on the operation performed, IOs can be anywhere from 512 bytes up to 8MB. For more details on the varying IO sizes SQL Server can use check out this post, What is SQL Server’s IO Block Size?. In this post, we’re going to focus on read-ahead reads. Covering each type of IO would require a whole book :)

A read-ahead read in SQL Server works like this, logic inside of SQL Server reads a series of pages from a data file in a single disk transaction as long as the pages read are physically contiguous on the data file. If they are not physically contiguous, the data is returned, and a second disk transaction is issued. Read-ahead reads work great if you’re scanning a table, SQL Server will try to read up to 512KB in a single disk transaction and be able to return more data to you more quickly with fewer round trips to disk and and also prefetching pages for subsequent queries.

Prove It To Me

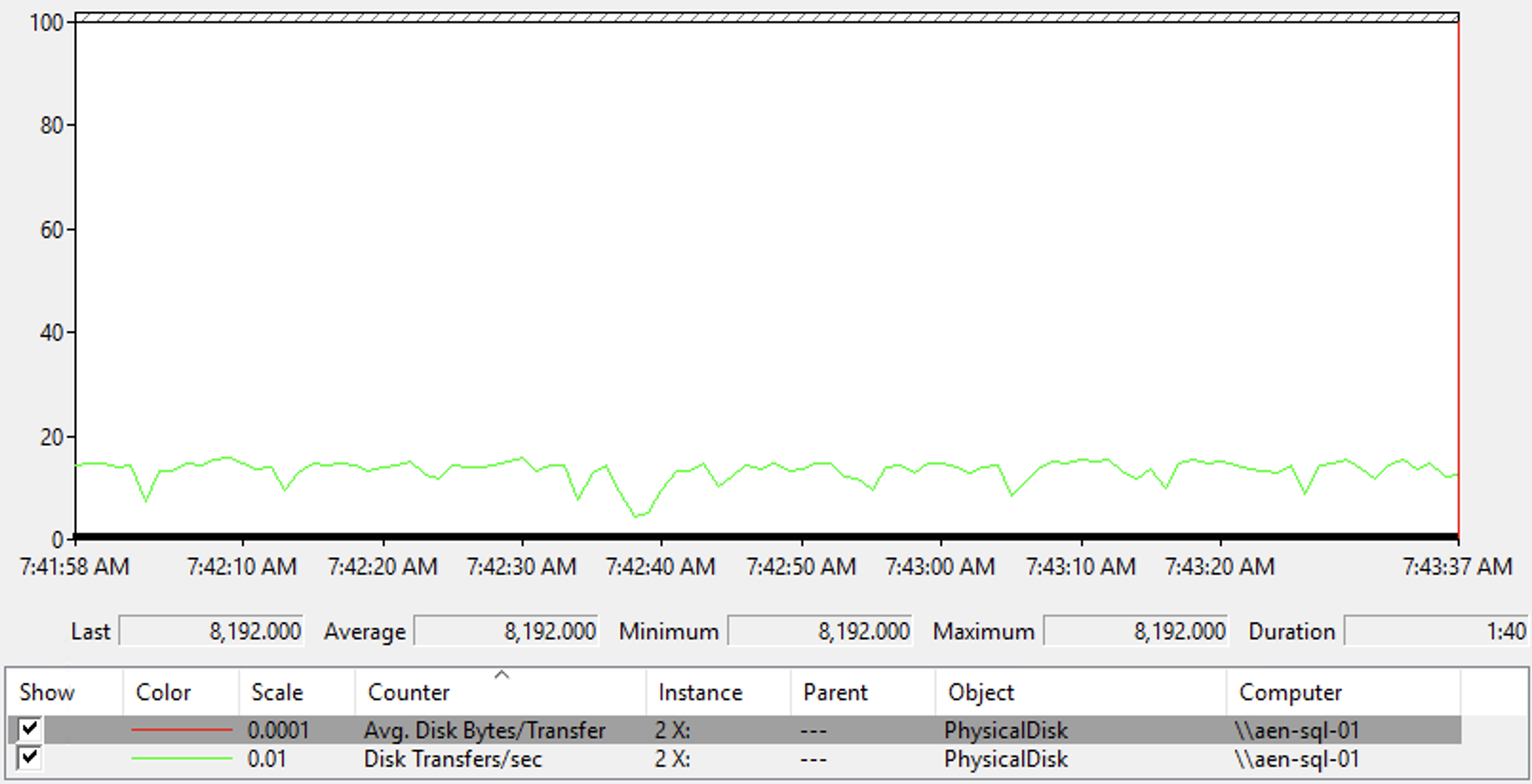

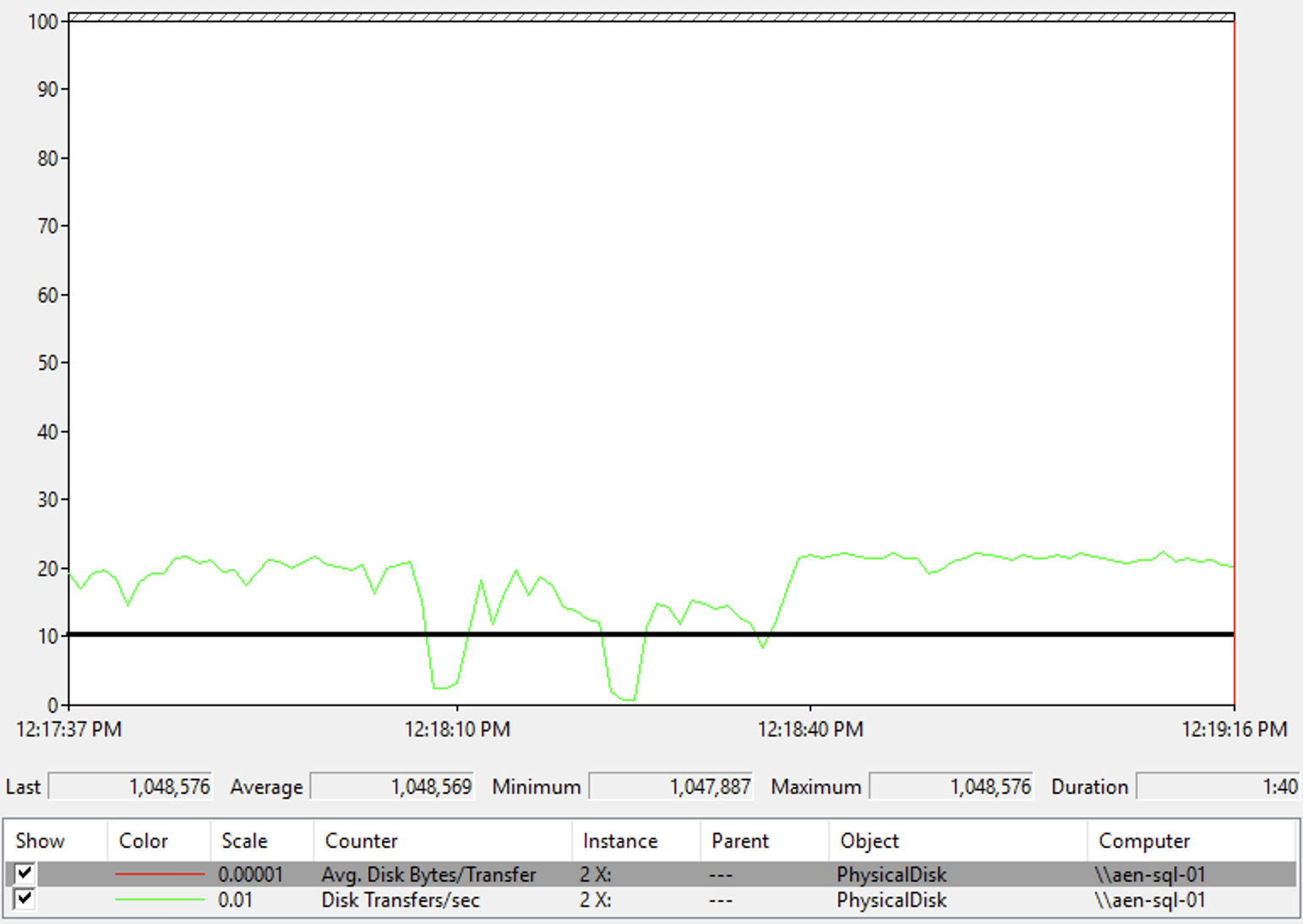

Ok, let’s get started. Open perfmon on a server (you can do this locally or remote) add the counters, Avg. Disk Bytes/Transfer which is IO Size and Disk Transfers/sec which is IOPs. You’ll find them under the PhysicalDisk Object. To reproduce the demo below accurately, try this with a database with a single file on a single volume (In my config below, it’s just on X:). Also, notice the Scale factor in the graphs below; you will need to ensure the Scale matches to reproduce this graph, 0.0001 for Avg. Disk Bytes/Transfer, and 0.01 for Disk Transfers/sec. You can also get IO information from a DMV in SQL Server, see this post Measuring SQL Server File Latency. I also want to call out that the average IO size is dependent upon the workload.

Reading Data from Disk with Read-Ahead Disabled

With Perfmon configured, let’s look at some example IO types in SQL Server, examining their size, and measuring the impact that IO size has on the number of IOs issued. In the code below, I’m performing a table scan. A table scan normally kicks off a read-ahead read. The size of a read-ahead read is variable from 128KB to 512KB based on how the data is laid out on disk. For this first demo, I will disable read-ahead with Trace Flag 652. This tells SQL Server to read data one 8KB page per disk transaction. This isn’t great for performance because SQL Server has to perform a round trip out to storage for each page in the table. Let’s check out the code…

This demo code is based off Bob Ward’s PASS Summit 2014 Session “Inside SQL Server IO”. thanks, Bob!

(Please DO NOT do this on a production server since we’re calling dropcleanbuffers)

DBCC DROPCLEANBUFFERS

GO

USE TPCH100 --change this to your DB

GO

DBCC TRACEON(652,-1)

GO

SELECT * FROM CUSTOMER OPTION (MAXDOP 1) --change this to a large table

GO

DBCC TRACEOFF(652,-1) --be sure to run this after your test to turn read ahead back on

GO

You should see results like this for this test when running this code. Notice values for Last, Average, Minimum, and Maximum are all 8,192,000 which is 8KB. SQL Server is reading exactly one page at a time. SQL Server goes to the location of the data page in the data file, reads that range of data, which is only 8KB, and returns that data in a single disk transaction. Also notice that the number of IOs is hovering around 1,800 per second for the duration of the test.

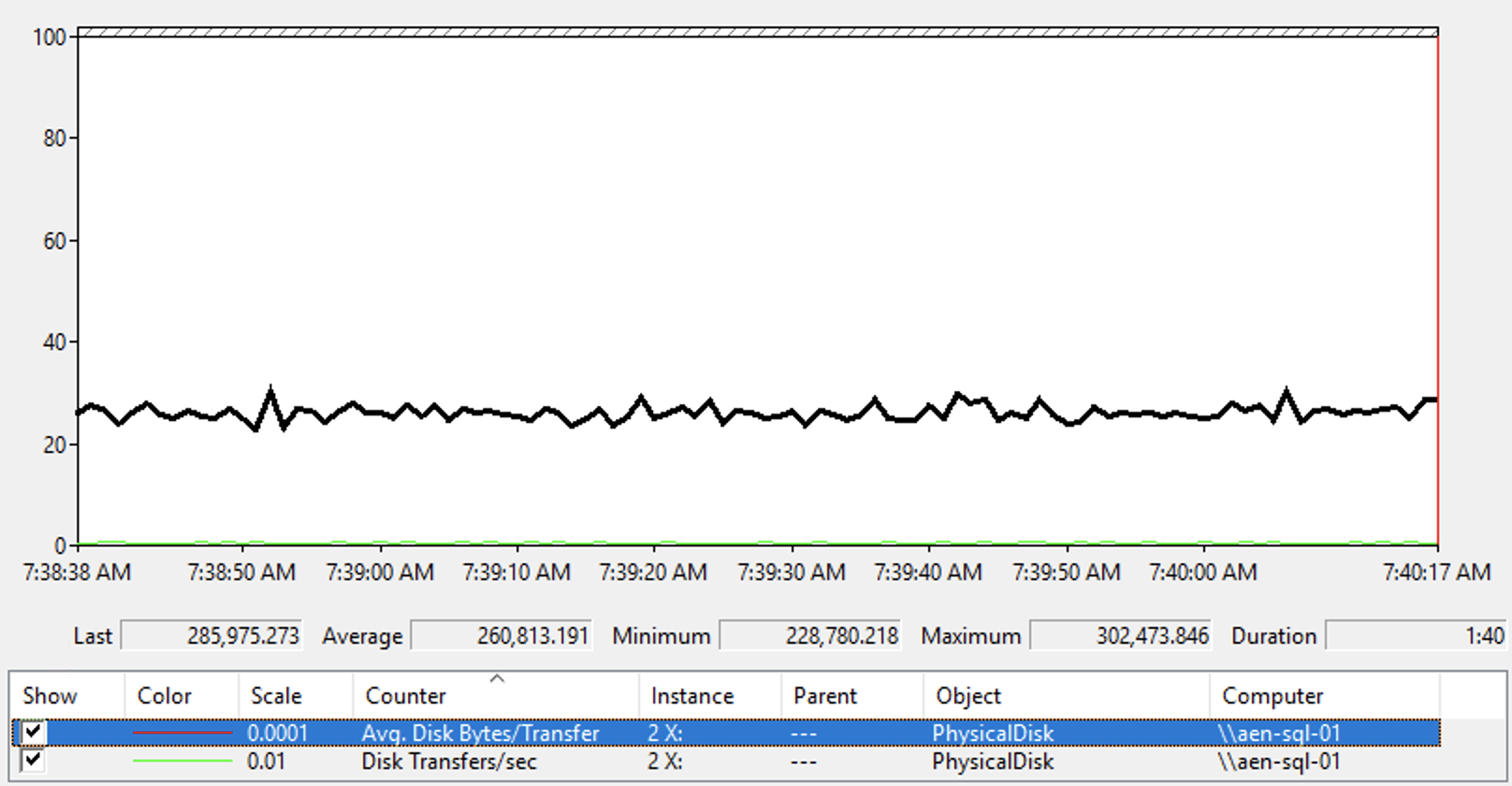

Reading Data from Disk with Read-Ahead Enabled

Let’s check out another IO pattern. let’s run a table scan that kicks off a read-ahead read. (Ensure that TF652 is off for this test) As we learned earlier, the size of a read-ahead read is variable from 128KB to 512KB based on how the data is laid out on disk. Here’s the code for this test.

(Again, please DO NOT do this on a production server since we’re calling dropcleanbuffers)

DBCC DROPCLEANBUFFERS

GO

USE TPCH100 --change this to your DB

GO

SELECT * FROM CUSTOMER OPTION (MAXDOP 1) --change this to a large table

GO

In the chart below black line shows average IO sizes (Avg. Disk Bytes/Transfer). Each IO is much larger than the previous test, and there is variance in IO Size. This is due to read-ahead trying to read runs of data, and when the read IO hits a fragment (non-contiguous page) on disk or hits 512KB, the IO returns the data from the disk. We also see the values Last ~= 279KB, Avgerage ~= 254KB, Minimum ~= 223KB, and Maximum = 295KB. These are the actual sizes of the disk transactions performed by the read. The other thing you’ll notice is that Disk transfers/Sec goes way down because SQL Server is reading the data in larger chunks and thus reducing the number of round trips to the disk device. Keep in mind the perfmon counter Avg. Disk Bytes/Transfer is the average size of an IO in a sampling period. There’s an extended event that will give you the exact size of each IO. Check out the post Dissecting SQL Server physical reads with Extended Events and Process monitor by Tim Chapman for more details.

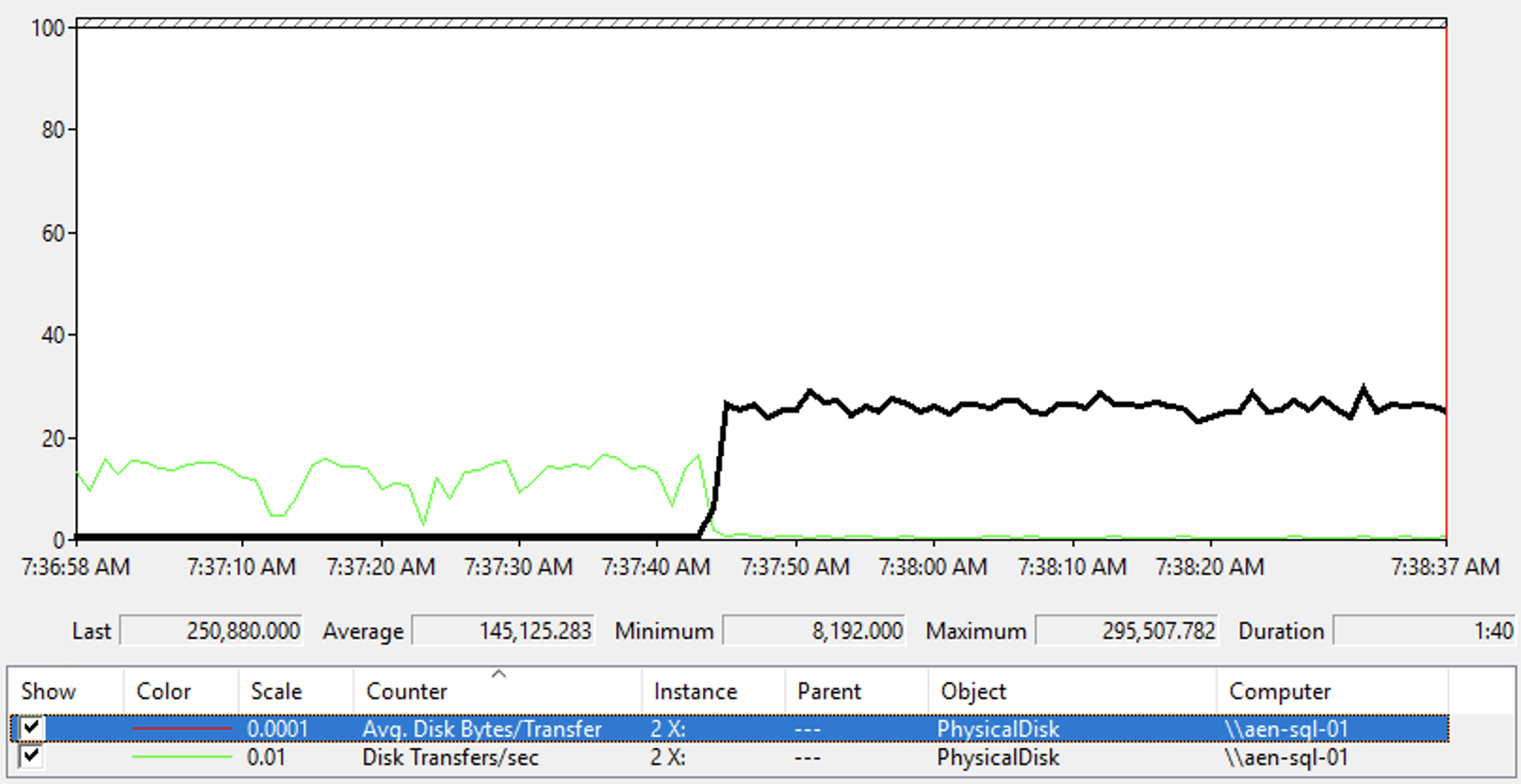

Let’s look at this from another angle. In the chart below, I started a workload with Trace Flag 652 enabled, disabling read-ahead read, causing SQL Server to issue 8KB reads per disk transaction. Then at 7:37:42AM, I disabled Trace Flag 652, enabling read-ahead reads. The chart is quite telling. The black line is Avg. Disk Bytes/Transfer and when read-ahead kicks in SQL Server starts performing larger IOs and performs far fewer disk transactions (Disk transfers/Sec) because each IO reads more data from disk, reducing the number of round trips to disk. This is why read ahead is important, you get more data from the disk into SQL Server faster and with fewer IOs.

Backups

Let’s try this test again with another IO type, this time backups. Backup IOs, when using the default configuration, read 1MB per IO. The chart below shows that the IO size for Last, Average, Minimum, and Maximum are all 1,048,576, which is 1MB. Note the scaling factor on this chart is 0.00001.

Summary

In this post, I showed you that SQL Server performs IOs in variable size based on the type of IO performed and that IO size is independent of NTFS Allocation Unit Size. So when you’re talking with your storage team or storage vendor, make sure that you’re using average IO size (Avg. Disk Bytes/Transfer) and the number of Disk transfers/Sec (IOPs) because those numbers together are what matter.